
Email: liangtong39 [at] gmail [dot] com

I am a senior security machine learning researcher at stellarcyber.ai, working on AI for threat detection and response. Before this, I was a researcher in the Data Science & System Security Department at NEC Labs America, where I worked on trustworthy machine learning and time series analysis. I received my Ph.D. in CS from WashU with the Turner Dissertation Award (under the supervision of Yevgeniy Vorobeychik), my M.S. in CS from Vanderbilt, and my M.Eng. & B.S. in communication engineering from UESTC.

We are actively hiring researchers, research engineers, and interns. Details can be found here.


I am passionate about 1) AI for security applications and 2) understanding when and why AI fails and how to prevent such failures (trustworthy AI). Much of my work lies at the intersection of machine learning, artificial intelligence, and computer security. More specifically, I am interested in using machine learning to improve system and network security, and in improving the security of machine learning models themselves in adversarial settings. I have also worked on mobile cloud computing, where I designed architectures and transmission schemes for offloading mobile AI applications to the edge cloud.


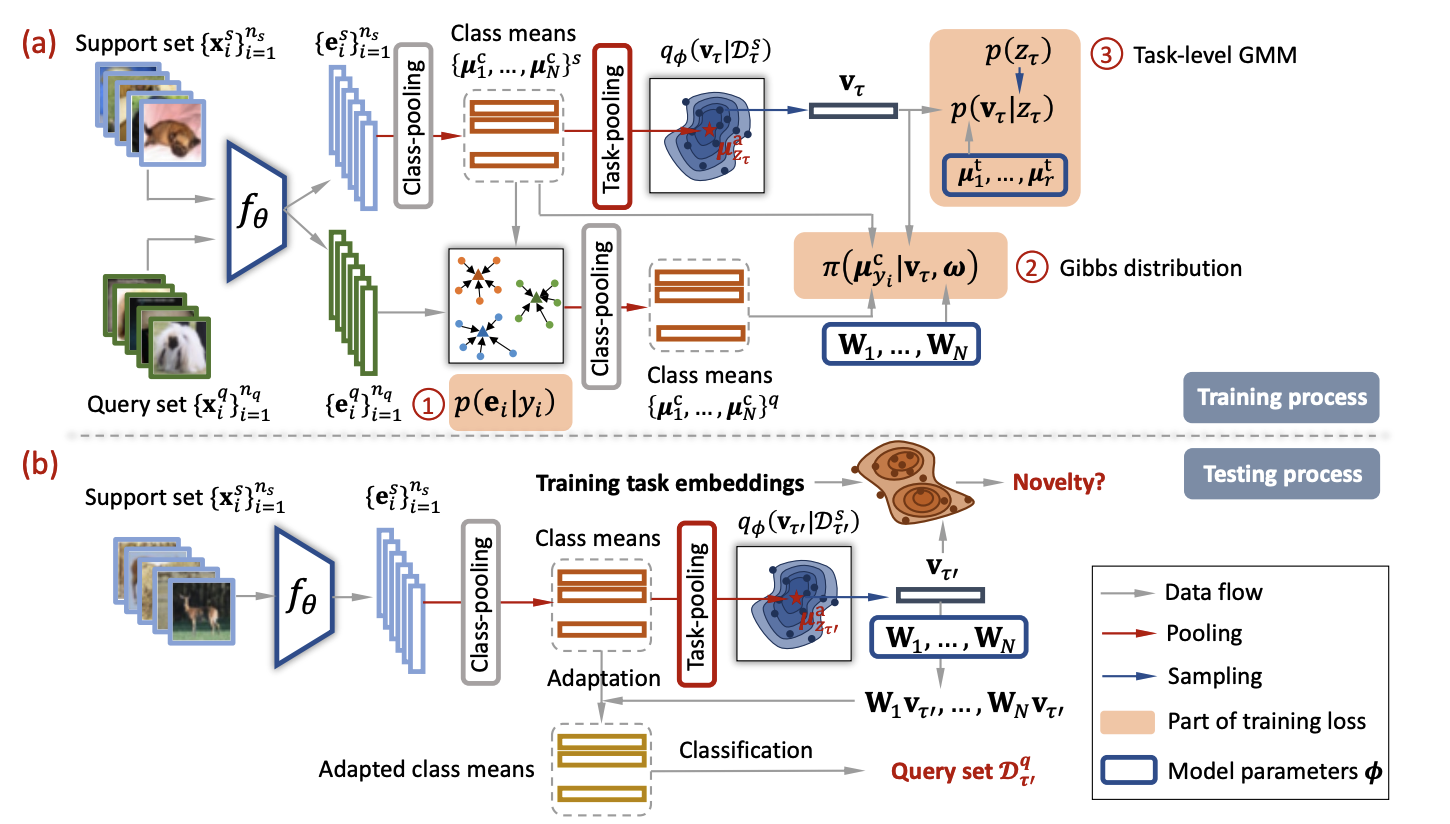

Yizhou Zhang, Jingchao Ni, Wei Cheng, Zhengzhang Chen, Liang Tong, Haifeng Chen, Yan Liu NeurIPS, 2023 bibtex A meta-training framework built on a novel hierarchical Gaussian mixture based task generative model.


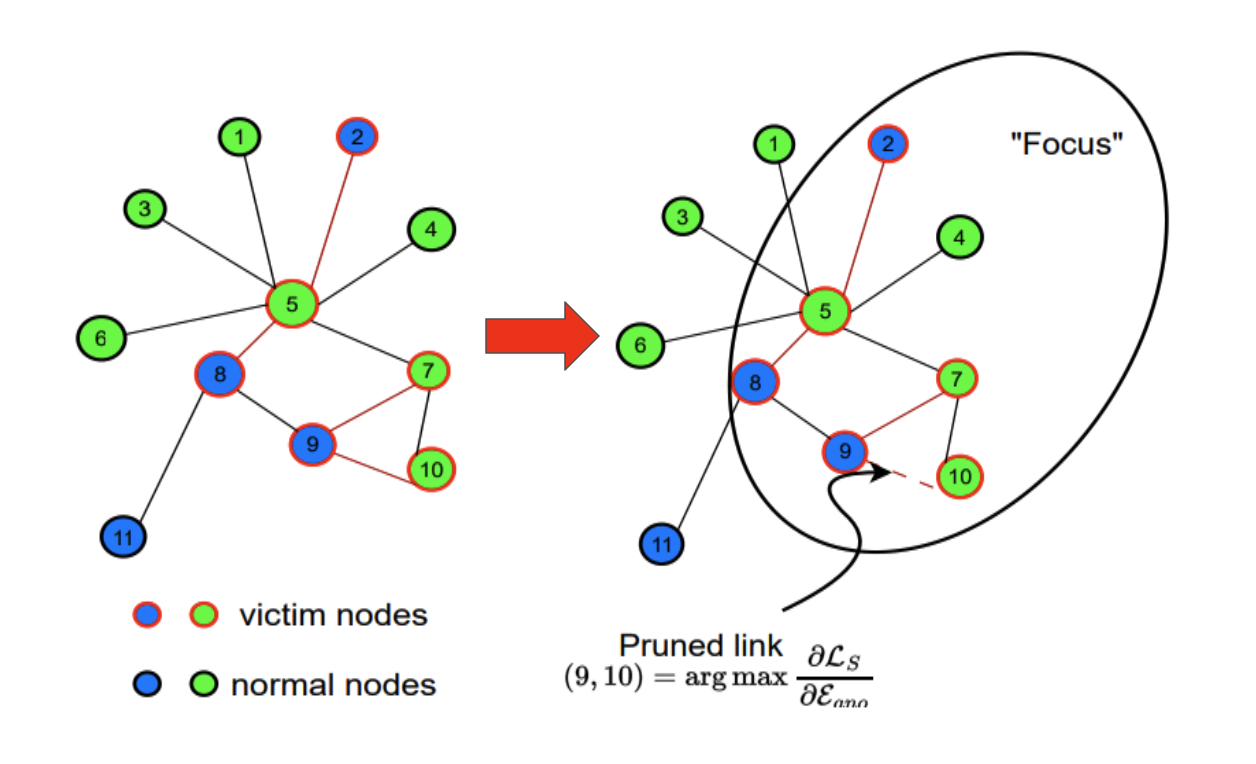

Yulin Zhu, Liang Tong, Gaolei Li, Xiapu Luo, Kai Zhou IEEE Transactions on Knowledge and Data Engineering, 2023 bibtex A sanitizer for poisoned graphs that effectively identifies the poison injected by attackers.


Dongjie Wang, Zhengzhang Chen, Jingchao Ni, Liang Tong, Zheng Wang, Yanjie Fu, Haifeng Chen KDD, 2023 bibtex A GNN framework for causal discovery and root cause localization.


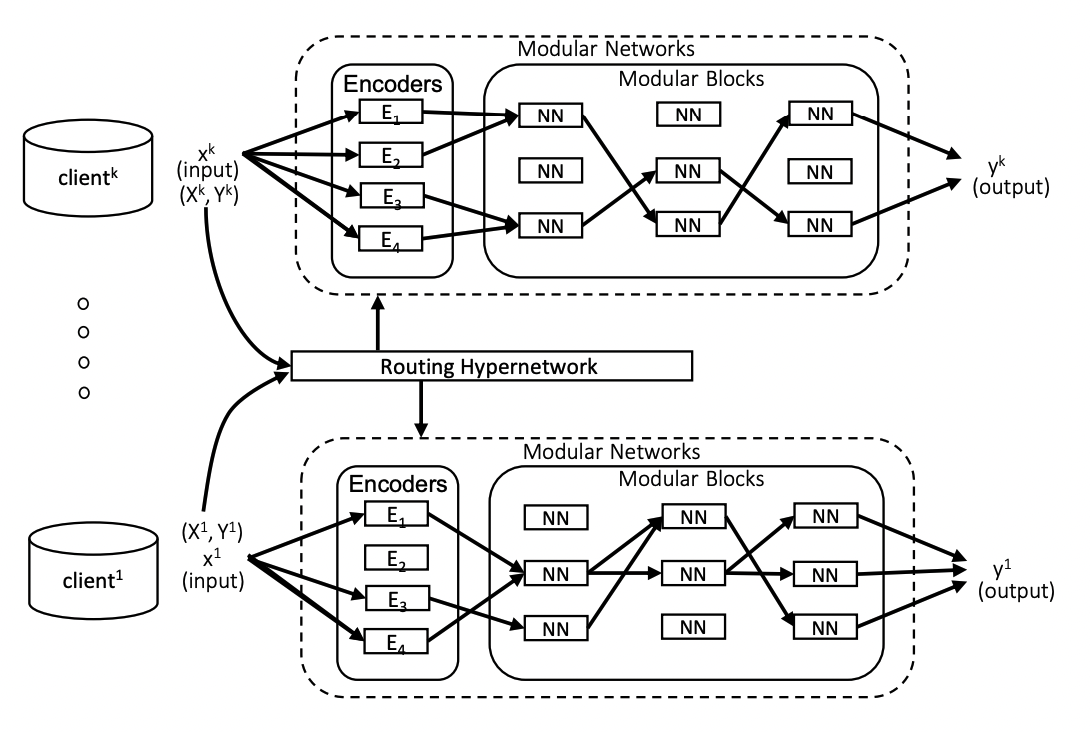

Tianchun Wang, Wei Cheng, Dongsheng Luo, Wenchao Yu, Jingchao Ni, Liang Tong, Haifeng Chen, Xiang Zhang ICDM, 2022 bibtex A personalized federated learning framework for heterogeneous clients.


Liang Tong Doctoral dissertation, Washington University in St. Louis, 2021 (Turner Dissertation Award, the best Ph.D. thesis of WashU CS) bibtex A systematic study of adversarial evaluation, defense, and deployment of ML systems.


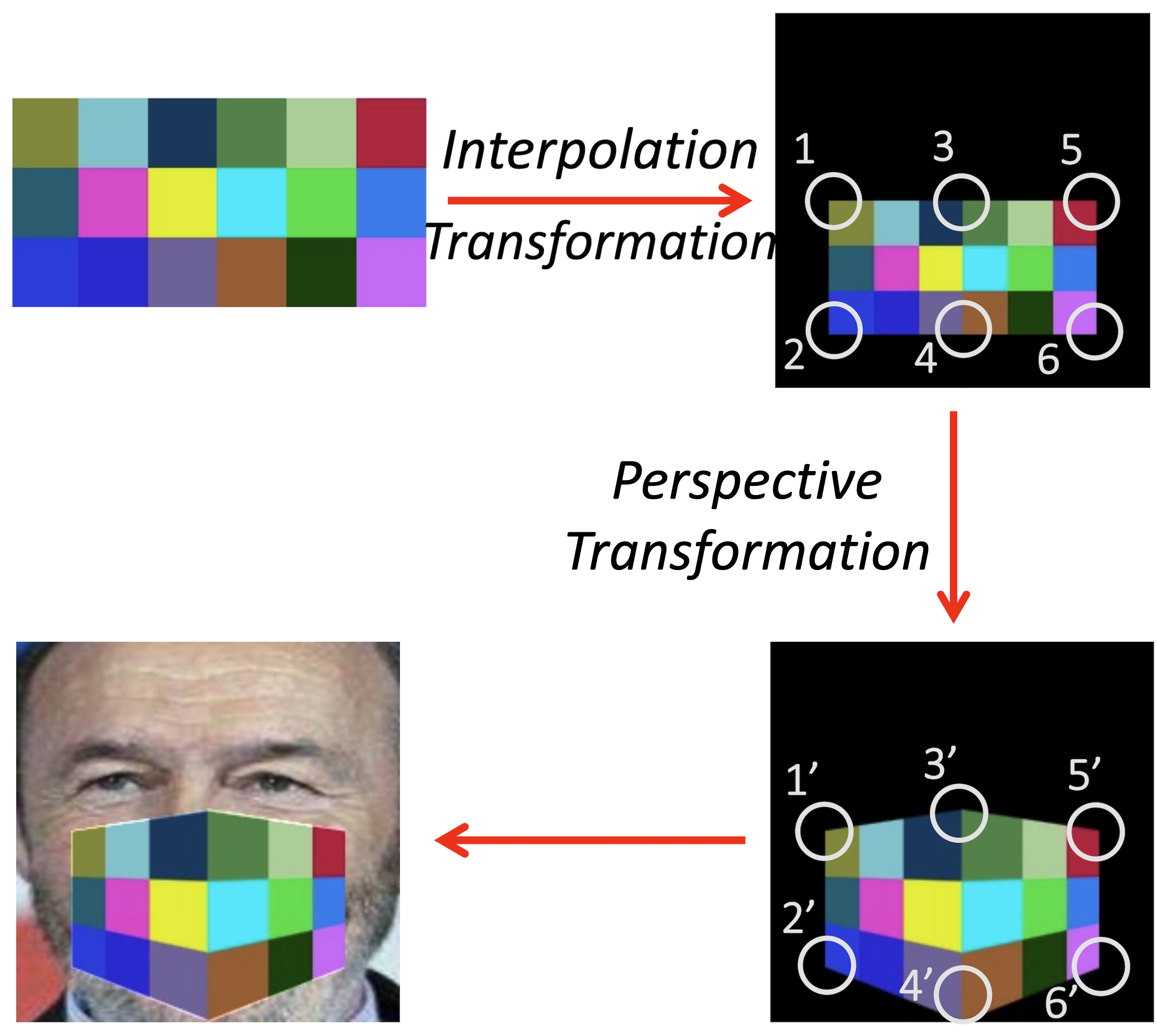

Liang Tong, Zhengzhang Chen, Jingchao Ni, Dongjin Song, Wei Cheng, Haifeng Chen, Yevgeniy Vorobeychik CVPR, 2021 supplement / arXiv / code / video / bibtex A framework for systematizing adversarial evaluation of face recognition systems.


Tong Wu, Liang Tong, Yevgeniy Vorobeychik ICLR, 2020 (Spotlight Presentation) arXiv / code / video / bibtex Is robust ML really robust in the face of physically realizable attacks on image classification? This paper rethinks adversarial robustness.


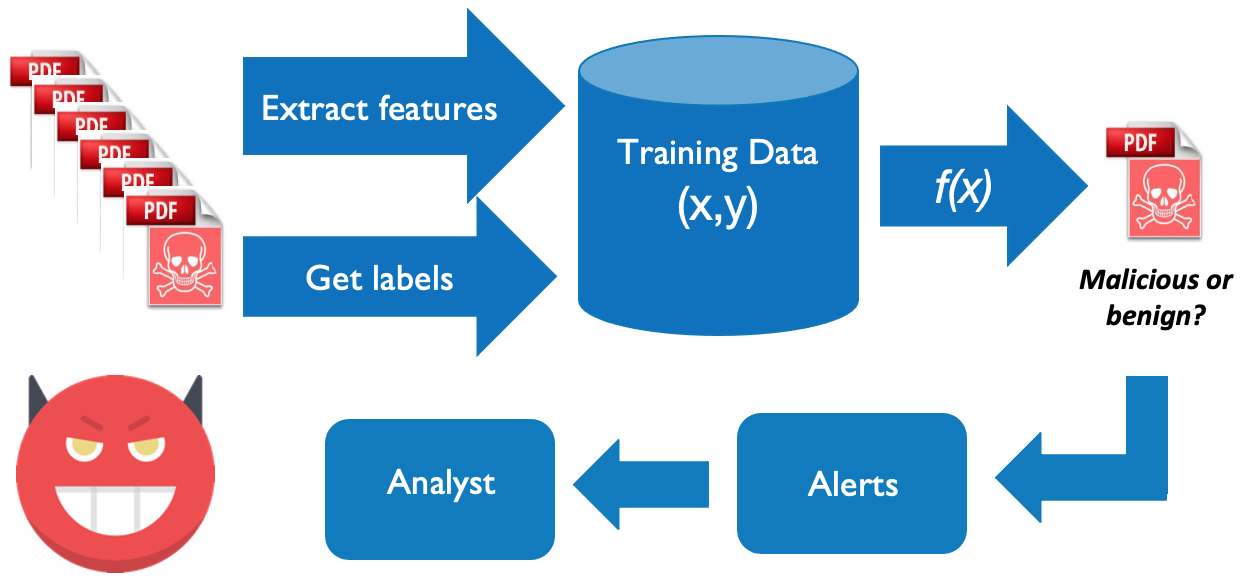

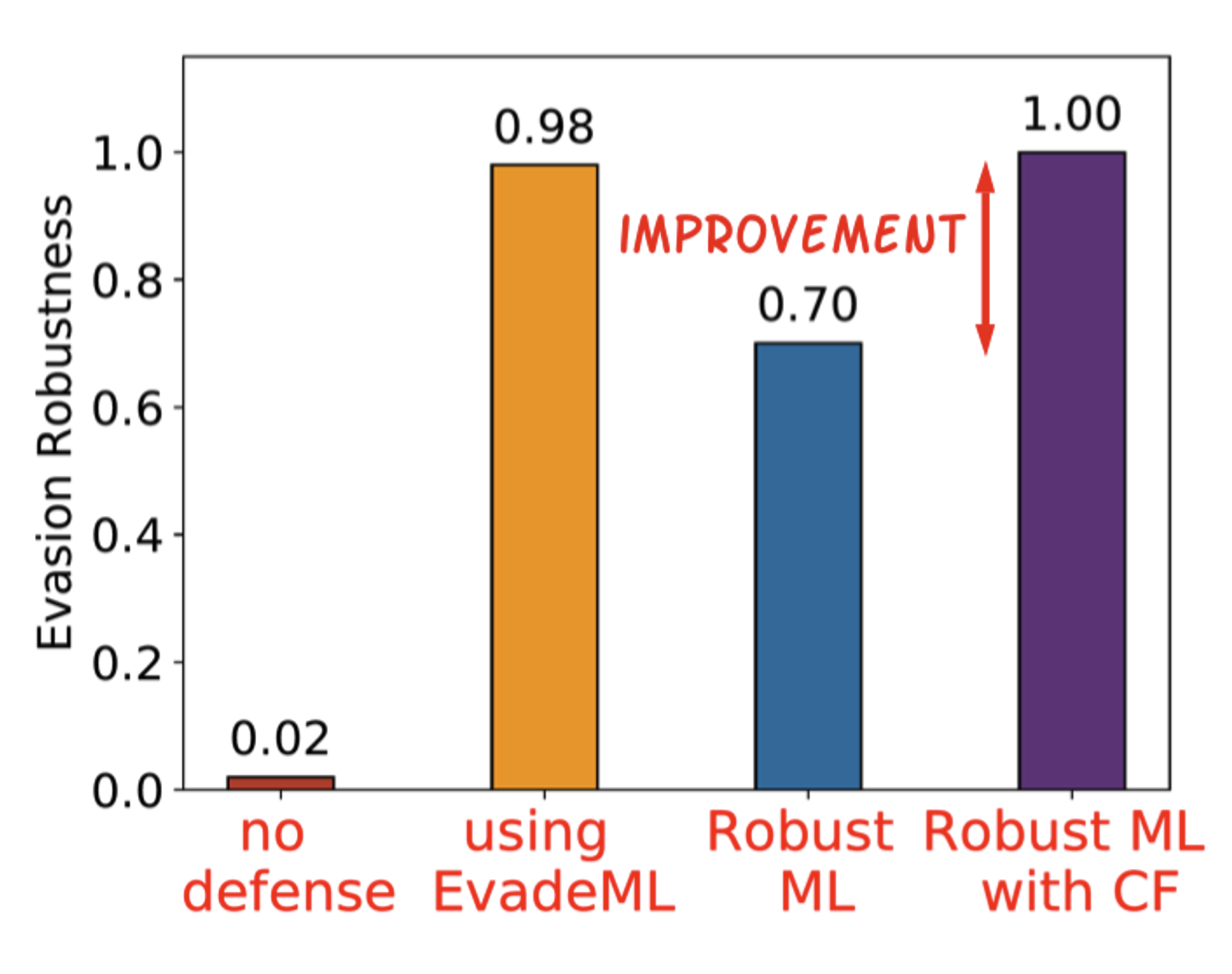

Liang Tong, Aron Laszka, Chao Yan, Ning Zhang, Yevgeniy Vorobeychik AAAI, 2020 arXiv (long version) / code / bibtex A robust ML model is not the end of the story: we also need to deal with the false-positive alerts raised by ML models.


Liang Tong, Bo Li, Chen Hajaj, Chaowei Xiao, Ning Zhang, Yevgeniy Vorobeychik USENIX Security, 2019 arXiv / code / video / bibtex Is robust ML really robust in the face of real attacks on malware classifiers? If not, how can we bridge the gap?


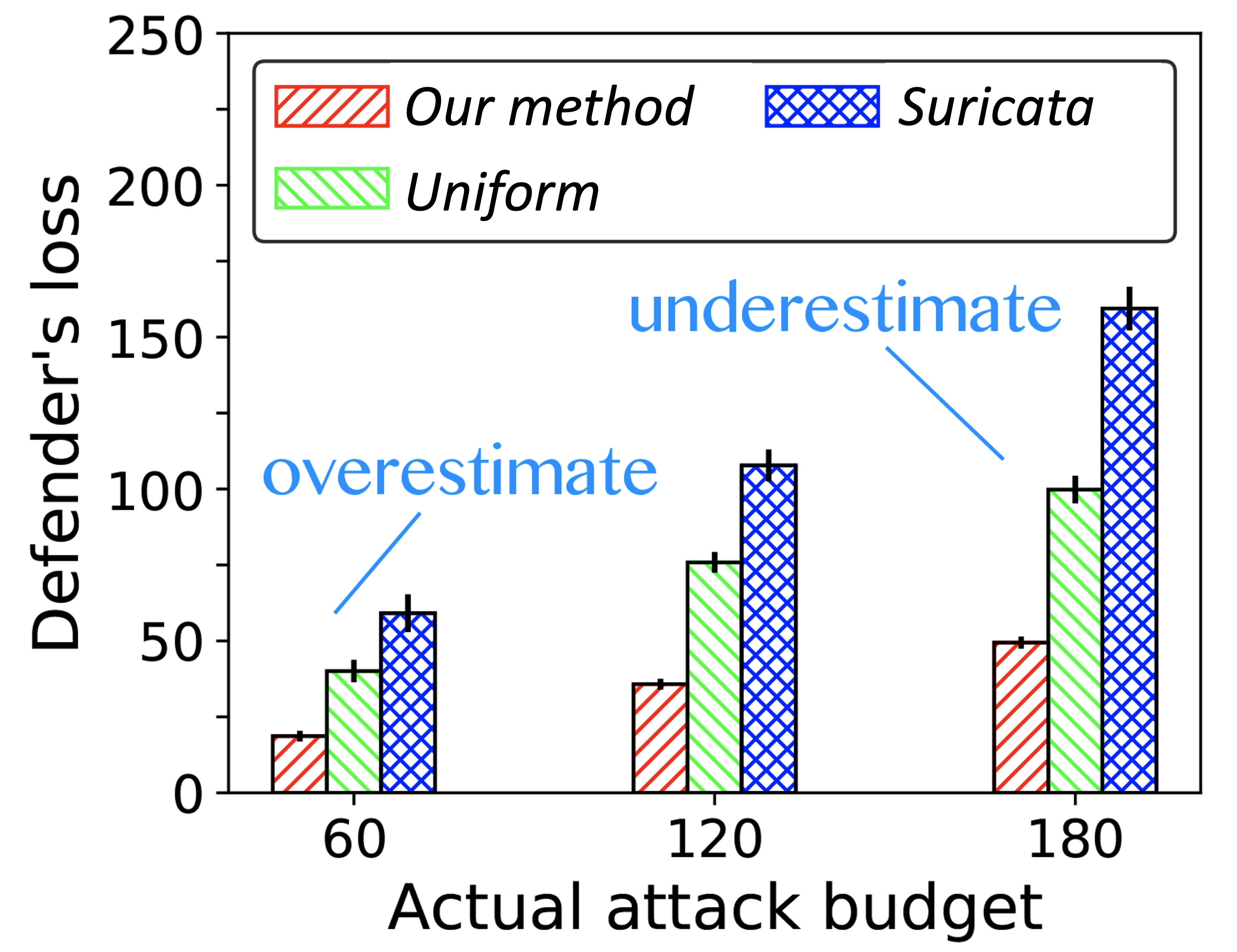

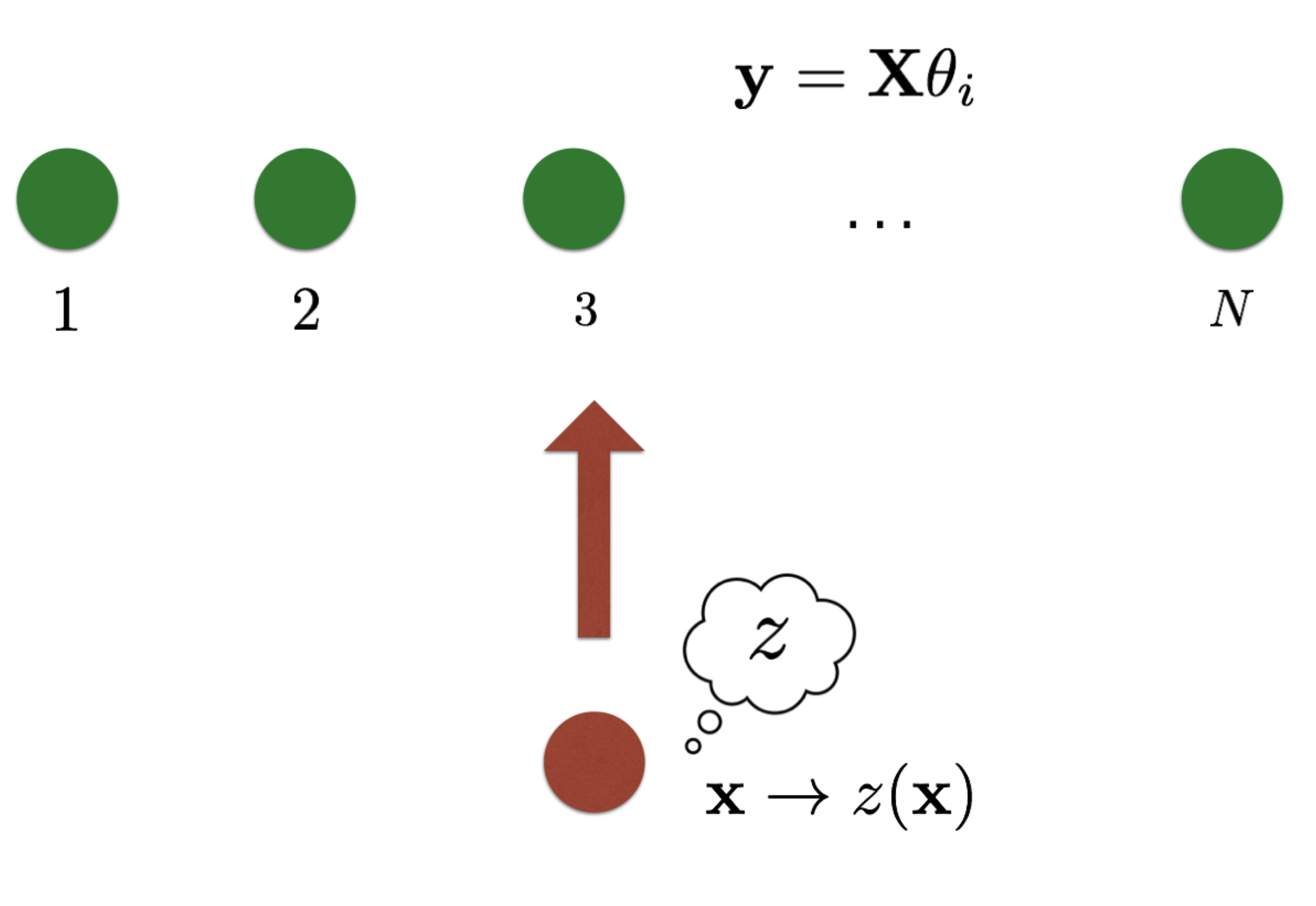

Liang Tong*, Sixie Yu*, Scott Alfeld, Yevgeniy Vorobeychik ICML, 2018 supplement / arXiv / code / video / bibtex What if there are multiple ML models and a single adversary? What are their optimal strategies?


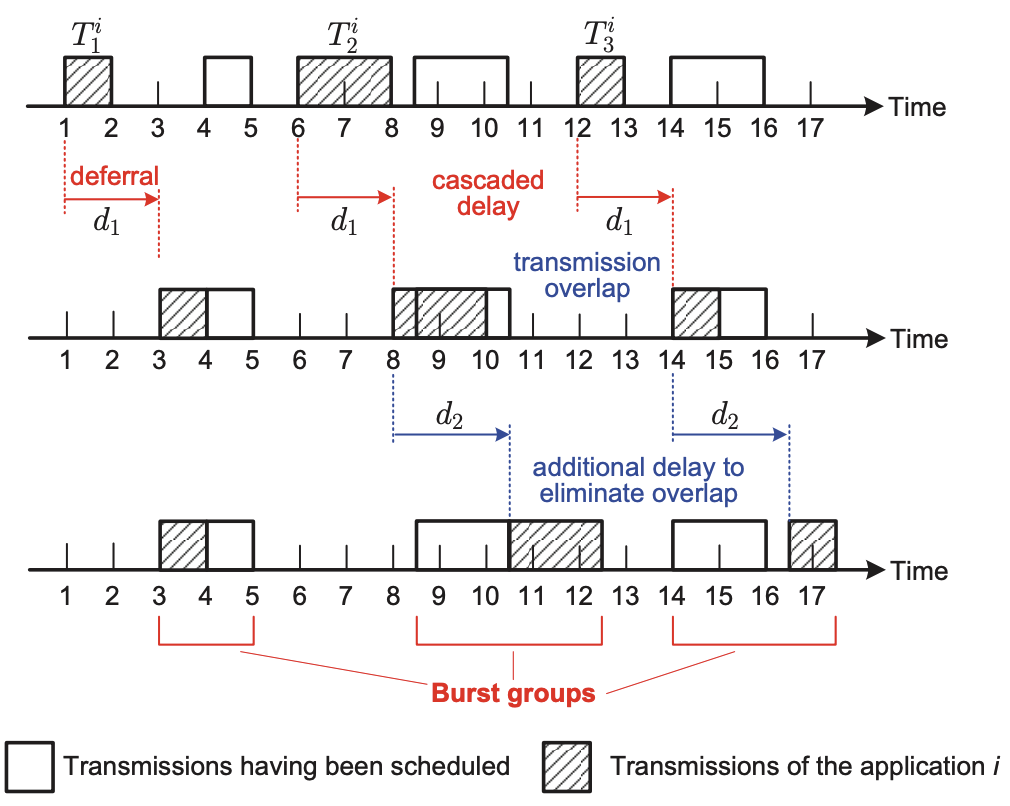

Liang Tong, Wei Gao INFOCOM, 2016 (Best Presentation in Session) slides / bibtex How can we adaptively balance the tradeoff between energy efficiency and responsiveness of mobile applications when they are offloaded to the edge cloud?


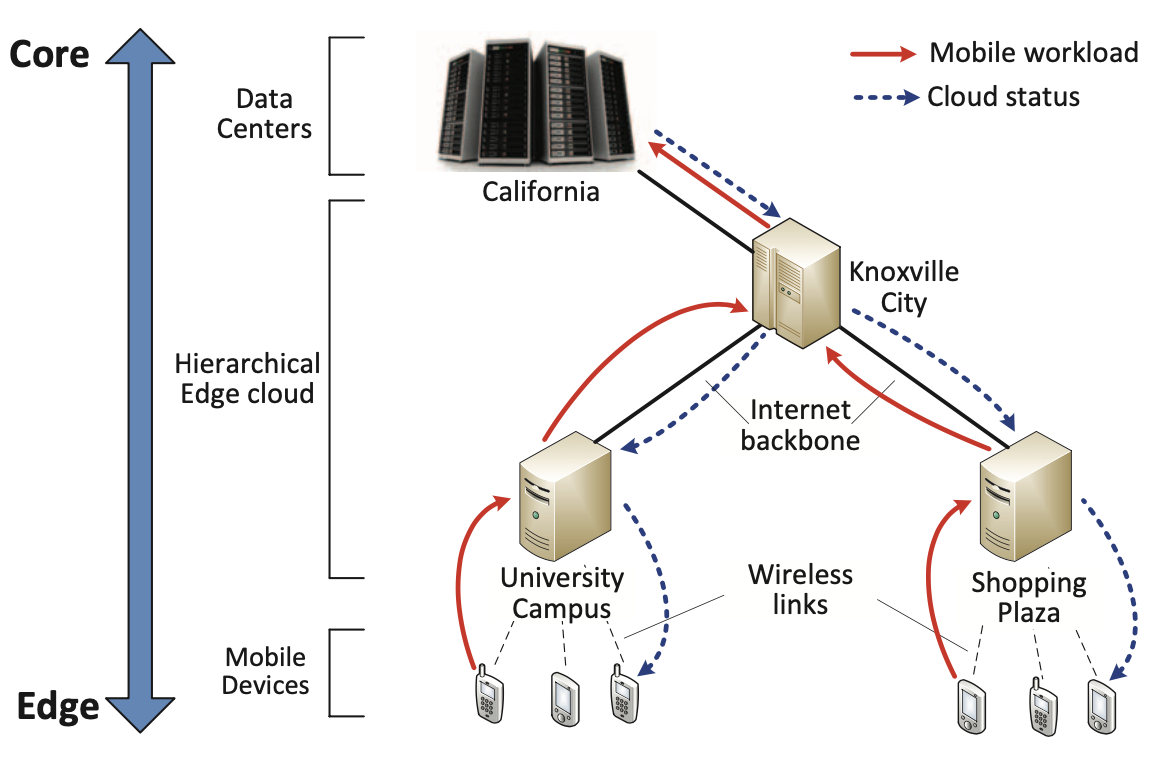

Liang Tong, Yong Li, Wei Gao INFOCOM, 2016 (The 2nd most cited paper at INFOCOM'16) slides / bibtex The first work on a hierarchical edge cloud architecture for mobile applications.


Website credit: Jon Barron